1.

## 13.3 Predicting Boston House Prices

# Inspect the data

install.packages("neuralnet")

install.packages("MASS")

library(neuralnet)

library(MASS)

{

head(Boston)

tail(Boston)

# Select the columns to analyze

# crim, zn, chas, nox, rad, medv

data <- Boston[, c(1,2,4,5,9,14)]

head(data)

}

{

# Check for missing values

na <- apply(data, 2, is.na)

na

apply(na, 2, sum)

# Normalize the data (min-max scaling)

maxs <- apply(data, 2, max)

maxs

mins <- apply(data, 2, min)

mins

data.scaled <- scale(data, center = mins, scale = maxs - mins)

data.scaled

}
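The normalization step above is min-max scaling: each column is mapped to [0, 1] with (x - min) / (max - min), and predictions are later mapped back with x_scaled * (max - min) + min. The following is a minimal sketch of that round trip on a made-up data frame (`toy` and its values are assumptions for illustration, not part of the Boston data):

```r
# Hypothetical toy data frame, used only to illustrate the scaling step.
toy <- data.frame(a = c(2, 4, 6, 10), b = c(100, 150, 125, 200))

mins <- apply(toy, 2, min)
maxs <- apply(toy, 2, max)

# scale() subtracts `center` and divides by `scale`, column by column,
# which is the min-max formula (x - min) / (max - min).
toy.scaled <- scale(toy, center = mins, scale = maxs - mins)

# The same computation written out by hand with sweep().
toy.manual <- sweep(sweep(as.matrix(toy), 2, mins, "-"), 2, maxs - mins, "/")

# Undo the scaling for column b: x = x_scaled * (max - min) + min.
b.restored <- toy.scaled[, "b"] * (maxs["b"] - mins["b"]) + mins["b"]

print(range(toy.scaled))                       # smallest and largest scaled value
print(all.equal(unname(b.restored), toy$b))    # checks that the round trip recovers b
```

Keeping `mins` and `maxs` around matters because the same values are needed later to convert the network output back to the original price scale.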

{

# Split into training and test sets

n <- nrow(data.scaled)

n

set.seed(1234)

index <- sample(1:n, round(0.8*n))

index

train <- as.data.frame(data.scaled[index,])

head(train)

test <- as.data.frame(data.scaled[-index,])

head(test)

}

{

# Define the input and output variables for training

names.col <- colnames(train)

names.col

var.dependent <- names.col[6]

var.dependent

var.independent <- names.col[-6]

var.independent

f.var.independent <- paste(var.independent, collapse = " + ")

f.var.independent

f <- paste(var.dependent, "~", f.var.independent)

f

}

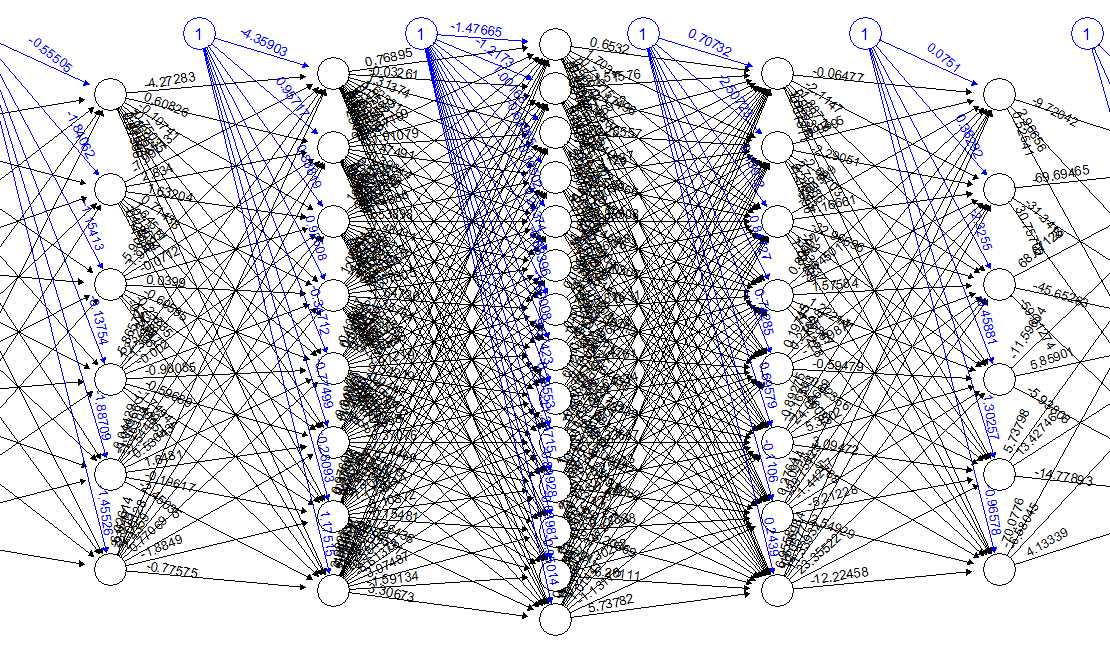

{

# Train the network and plot the model

model <- neuralnet(f,

data = train,

hidden = c(5, 3, 3, 2),

linear.output = T)

plot(model)

}

{

# Test

predicted <- compute(model, test)

predicted$net.result

predicted.real <- predicted$net.result * (maxs[6] - mins[6]) + mins[6]

predicted.real

test.real <- test$medv * (maxs[6] - mins[6]) + mins[6]

test.real

}

{

# Compare predicted with actual values (scatter plot)

plot(test.real, predicted.real,

xlim=c(0, 50), ylim=c(0, 50),

main="Predicted vs. actual values",

xlab="Actual", ylab="Predicted",

col="red",

pch=18, cex=0.7)

abline(0, 1, col="blue", lty=2)

MAPE.model <- sum(abs(test.real - predicted.real)/test.real * 100) / length(test.real)

MAPE.model

}

Execution results

# Inspect the data

> install.packages("neuralnet")

WARNING: Rtools is required to build R packages but is not currently installed. Please download and install the appropriate version of Rtools before proceeding:

https://cran.rstudio.com/bin/windows/Rtools/

Installing package into ‘C:/Users/PC44069/Documents/R/win-library/4.1’

(as ‘lib’ is unspecified)

also installing the dependency ‘Deriv’

trying URL 'https://cran.rstudio.com/bin/windows/contrib/4.1/Deriv_4.1.3.zip'

Content type 'application/zip' length 148835 bytes (145 KB)

downloaded 145 KB

trying URL 'https://cran.rstudio.com/bin/windows/contrib/4.1/neuralnet_1.44.2.zip'

Content type 'application/zip' length 123926 bytes (121 KB)

downloaded 121 KB

package ‘Deriv’ successfully unpacked and MD5 sums checked

package ‘neuralnet’ successfully unpacked and MD5 sums checked

The downloaded binary packages are in

C:\Users\Public\Documents\ESTsoft\CreatorTemp\Rtmp6rxTuC\downloaded_packages

> install.packages("MASS")

WARNING: Rtools is required to build R packages but is not currently installed. Please download and install the appropriate version of Rtools before proceeding:

https://cran.rstudio.com/bin/windows/Rtools/

Installing package into ‘C:/Users/PC44069/Documents/R/win-library/4.1’

(as ‘lib’ is unspecified)

trying URL 'https://cran.rstudio.com/bin/windows/contrib/4.1/MASS_7.3-57.zip'

Content type 'application/zip' length 1189664 bytes (1.1 MB)

downloaded 1.1 MB

package ‘MASS’ successfully unpacked and MD5 sums checked

The downloaded binary packages are in

C:\Users\Public\Documents\ESTsoft\CreatorTemp\Rtmp6rxTuC\downloaded_packages

> library(neuralnet)

> library(MASS)

> {

+ head(Boston)

+ tail(Boston)

+

+ # Select the columns to analyze

+ # crim, zn, chas, nox, rad, medv

+ data <- Boston[, c(1,2,4,5,9,14)]

+ head(data)

+ }

crim zn chas nox rad medv

1 0.00632 18 0 0.538 1 24.0

2 0.02731 0 0 0.469 2 21.6

3 0.02729 0 0 0.469 2 34.7

4 0.03237 0 0 0.458 3 33.4

5 0.06905 0 0 0.458 3 36.2

6 0.02985 0 0 0.458 3 28.7

> {

+ # Check for missing values

+ na <- apply(data, 2, is.na)

+ na

+ apply(na, 2, sum)

+

+ # Normalize the data (min-max scaling)

+ maxs <- apply(data, 2, max)

+ maxs

+ mins <- apply(data, 2, min)

+ mins

+ data.scaled <- scale(data, center = mins, scale = maxs - mins)

+ data.scaled

+ }

crim zn chas nox rad medv

1 0.000000e+00 0.180 0 0.314814815 0.00000000 0.42222222

2 2.359225e-04 0.000 0 0.172839506 0.04347826 0.36888889

3 2.356977e-04 0.000 0 0.172839506 0.04347826 0.66000000

4 2.927957e-04 0.000 0 0.150205761 0.08695652 0.63111111

5 7.050701e-04 0.000 0 0.150205761 0.08695652 0.69333333

6 2.644715e-04 0.000 0 0.150205761 0.08695652 0.52666667

7 9.213230e-04 0.125 0 0.286008230 0.17391304 0.39777778

8 1.553672e-03 0.125 0 0.286008230 0.17391304 0.49111111

9 2.303251e-03 0.125 0 0.286008230 0.17391304 0.25555556

10 1.840173e-03 0.125 0 0.286008230 0.17391304 0.30888889

11 2.456674e-03 0.125 0 0.286008230 0.17391304 0.22222222

12 1.249299e-03 0.125 0 0.286008230 0.17391304 0.30888889

13 9.830293e-04 0.125 0 0.286008230 0.17391304 0.37111111

14 7.007315e-03 0.000 0 0.314814815 0.13043478 0.34222222

15 7.099481e-03 0.000 0 0.314814815 0.13043478 0.29333333

16 6.980677e-03 0.000 0 0.314814815 0.13043478 0.33111111

17 1.177488e-02 0.000 0 0.314814815 0.13043478 0.40222222

18 8.743184e-03 0.000 0 0.314814815 0.13043478 0.27777778

19 8.951232e-03 0.000 0 0.314814815 0.13043478 0.33777778

20 8.086782e-03 0.000 0 0.314814815 0.13043478 0.29333333

21 1.399878e-02 0.000 0 0.314814815 0.13043478 0.19111111

22 9.505689e-03 0.000 0 0.314814815 0.13043478 0.32444444

23 1.378163e-02 0.000 0 0.314814815 0.13043478 0.22666667

24 1.103868e-02 0.000 0 0.314814815 0.13043478 0.21111111

25 8.361706e-03 0.000 0 0.314814815 0.13043478 0.23555556

26 9.376432e-03 0.000 0 0.314814815 0.13043478 0.19777778

27 7.481071e-03 0.000 0 0.314814815 0.13043478 0.25777778

28 1.067159e-02 0.000 0 0.314814815 0.13043478 0.21777778

29 8.617186e-03 0.000 0 0.314814815 0.13043478 0.29777778

30 1.119626e-02 0.000 0 0.314814815 0.13043478 0.35555556

31 1.263900e-02 0.000 0 0.314814815 0.13043478 0.17111111

32 1.515569e-02 0.000 0 0.314814815 0.13043478 0.21111111

33 1.552964e-02 0.000 0 0.314814815 0.13043478 0.18222222

34 1.287402e-02 0.000 0 0.314814815 0.13043478 0.18000000

35 1.805667e-02 0.000 0 0.314814815 0.13043478 0.18888889

36 6.502201e-04 0.000 0 0.234567901 0.17391304 0.30888889

37 1.024167e-03 0.000 0 0.234567901 0.17391304 0.33333333

38 8.297190e-04 0.000 0 0.234567901 0.17391304 0.35555556

39 1.896485e-03 0.000 0 0.234567901 0.17391304 0.43777778

40 2.395193e-04 0.750 0 0.088477366 0.08695652 0.57333333

41 3.065082e-04 0.750 0 0.088477366 0.08695652 0.66444444

42 1.361360e-03 0.000 0 0.129629630 0.08695652 0.48000000

43 1.519391e-03 0.000 0 0.129629630 0.08695652 0.45111111

44 1.720133e-03 0.000 0 0.129629630 0.08695652 0.43777778

45 1.307971e-03 0.000 0 0.129629630 0.08695652 0.36000000

46 1.855684e-03 0.000 0 0.129629630 0.08695652 0.31777778

47 2.046086e-03 0.000 0 0.129629630 0.08695652 0.33333333

48 2.505904e-03 0.000 0 0.129629630 0.08695652 0.25777778

49 2.782402e-03 0.000 0 0.129629630 0.08695652 0.20888889

50 2.399127e-03 0.000 0 0.129629630 0.08695652 0.32000000

51 9.262685e-04 0.210 0 0.111111111 0.13043478 0.32666667

52 4.164331e-04 0.210 0 0.111111111 0.13043478 0.34444444

53 5.314158e-04 0.210 0 0.111111111 0.13043478 0.44444444

54 4.888171e-04 0.210 0 0.111111111 0.13043478 0.40888889

55 8.182544e-05 0.750 0 0.051440329 0.08695652 0.30888889

56 7.631796e-05 0.900 0 0.037037037 0.17391304 0.67555556

57 1.599418e-04 0.850 0 0.051440329 0.04347826 0.43777778

58 8.991807e-05 1.000 0 0.053497942 0.17391304 0.59111111

59 1.664945e-03 0.250 0 0.139917695 0.30434783 0.40666667

60 1.089807e-03 0.250 0 0.139917695 0.30434783 0.32444444

61 1.607286e-03 0.250 0 0.139917695 0.30434783 0.30444444

62 1.858944e-03 0.250 0 0.139917695 0.30434783 0.24444444

63 1.168373e-03 0.250 0 0.139917695 0.30434783 0.38222222

64 1.350794e-03 0.250 0 0.139917695 0.30434783 0.44444444

65 1.482524e-04 0.175 0 0.063991770 0.08695652 0.62222222

66 3.317977e-04 0.800 0 0.026748971 0.13043478 0.41111111

67 4.211538e-04 0.800 0 0.026748971 0.13043478 0.32000000

68 5.796344e-04 0.125 0 0.049382716 0.13043478 0.37777778

69 1.452402e-03 0.125 0 0.049382716 0.13043478 0.27555556

70 1.369452e-03 0.125 0 0.049382716 0.13043478 0.35333333

71 9.209858e-04 0.000 0 0.057613169 0.13043478 0.42666667

72 1.713389e-03 0.000 0 0.057613169 0.13043478 0.37111111

73 9.589762e-04 0.000 0 0.057613169 0.13043478 0.39555556

74 2.125101e-03 0.000 0 0.057613169 0.13043478 0.40888889

75 8.164561e-04 0.000 0 0.106995885 0.17391304 0.42444444

76 9.980906e-04 0.000 0 0.106995885 0.17391304 0.36444444

77 1.070137e-03 0.000 0 0.106995885 0.17391304 0.33333333

78 9.076105e-04 0.000 0 0.106995885 0.17391304 0.35111111

79 5.635615e-04 0.000 0 0.106995885 0.17391304 0.36000000

80 8.716433e-04 0.000 0 0.106995885 0.17391304 0.34000000

81 3.912560e-04 0.250 0 0.084362140 0.13043478 0.51111111

82 4.304828e-04 0.250 0 0.084362140 0.13043478 0.42000000

83 3.402275e-04 0.250 0 0.084362140 0.13043478 0.44000000

84 3.280886e-04 0.250 0 0.084362140 0.13043478 0.39777778

85 4.975841e-04 0.000 0 0.131687243 0.08695652 0.42000000

86 5.735649e-04 0.000 0 0.131687243 0.08695652 0.48000000

87 5.120834e-04 0.000 0 0.131687243 0.08695652 0.38888889

88 7.327199e-04 0.000 0 0.131687243 0.08695652 0.38222222

89 5.651351e-04 0.000 0 0.213991770 0.04347826 0.41333333

90 5.248967e-04 0.000 0 0.213991770 0.04347826 0.52666667

91 4.554350e-04 0.000 0 0.213991770 0.04347826 0.39111111

92 3.709120e-04 0.000 0 0.213991770 0.04347826 0.37777778

93 4.013718e-04 0.280 0 0.162551440 0.13043478 0.39777778

94 2.521078e-04 0.280 0 0.162551440 0.13043478 0.44444444

95 4.116000e-04 0.280 0 0.162551440 0.13043478 0.34666667

96 1.300665e-03 0.000 0 0.123456790 0.04347826 0.52000000

97 1.221987e-03 0.000 0 0.123456790 0.04347826 0.36444444

98 1.287065e-03 0.000 0 0.123456790 0.04347826 0.74888889

99 8.491638e-04 0.000 0 0.123456790 0.04347826 0.86222222

100 7.000122e-04 0.000 0 0.123456790 0.04347826 0.62666667

101 1.599867e-03 0.000 0 0.277777778 0.17391304 0.50000000

102 1.213894e-03 0.000 0 0.277777778 0.17391304 0.47777778

103 2.500172e-03 0.000 0 0.277777778 0.17391304 0.30222222

104 2.307410e-03 0.000 0 0.277777778 0.17391304 0.31777778

105 1.498035e-03 0.000 0 0.277777778 0.17391304 0.33555556

106 1.419582e-03 0.000 0 0.277777778 0.17391304 0.32222222

107 1.853211e-03 0.000 0 0.277777778 0.17391304 0.32222222

108 1.403284e-03 0.000 0 0.277777778 0.17391304 0.34222222

109 1.367879e-03 0.000 0 0.277777778 0.17391304 0.32888889

110 2.892102e-03 0.000 0 0.277777778 0.17391304 0.32000000

111 1.142072e-03 0.000 0 0.277777778 0.17391304 0.37111111

112 1.062382e-03 0.000 0 0.333333333 0.21739130 0.39555556

113 1.314715e-03 0.000 0 0.333333333 0.21739130 0.30666667

114 2.425540e-03 0.000 0 0.333333333 0.21739130 0.30444444

115 1.528495e-03 0.000 0 0.333333333 0.21739130 0.30000000

116 1.854785e-03 0.000 0 0.333333333 0.21739130 0.29555556

117 1.407892e-03 0.000 0 0.333333333 0.21739130 0.36000000

118 1.625944e-03 0.000 0 0.333333333 0.21739130 0.31555556

119 1.396652e-03 0.000 0 0.333333333 0.21739130 0.34222222

120 1.556032e-03 0.000 0 0.333333333 0.21739130 0.31777778

121 7.043957e-04 0.000 0 0.403292181 0.04347826 0.37777778

122 7.342934e-04 0.000 0 0.403292181 0.04347826 0.34000000

123 9.741499e-04 0.000 0 0.403292181 0.04347826 0.34444444

124 1.619200e-03 0.000 0 0.403292181 0.04347826 0.27333333

125 1.035969e-03 0.000 0 0.403292181 0.04347826 0.30666667

126 1.828709e-03 0.000 0 0.403292181 0.04347826 0.36444444

127 4.282685e-03 0.000 0 0.403292181 0.04347826 0.23777778

128 2.841748e-03 0.000 0 0.491769547 0.13043478 0.24888889

129 3.586719e-03 0.000 0 0.491769547 0.13043478 0.28888889

130 9.834002e-03 0.000 0 0.491769547 0.13043478 0.20666667

131 3.751157e-03 0.000 0 0.491769547 0.13043478 0.31555556

132 1.333732e-02 0.000 0 0.491769547 0.13043478 0.32444444

133 6.560984e-03 0.000 0 0.491769547 0.13043478 0.40000000

134 3.636062e-03 0.000 0 0.491769547 0.13043478 0.29777778

135 1.090088e-02 0.000 0 0.491769547 0.13043478 0.23555556

136 6.198277e-03 0.000 0 0.491769547 0.13043478 0.29111111

137 3.555361e-03 0.000 0 0.491769547 0.13043478 0.27555556

138 3.889069e-03 0.000 0 0.491769547 0.13043478 0.26888889

139 2.736656e-03 0.000 0 0.491769547 0.13043478 0.18444444

140 6.049238e-03 0.000 0 0.491769547 0.13043478 0.28444444

141 3.198611e-03 0.000 0 0.491769547 0.13043478 0.20000000

142 1.823449e-02 0.000 0 0.491769547 0.13043478 0.20888889

143 3.725677e-02 0.000 1 1.000000000 0.17391304 0.18666667

144 4.598275e-02 0.000 0 1.000000000 0.17391304 0.23555556

145 3.117257e-02 0.000 0 1.000000000 0.17391304 0.15111111

146 2.667217e-02 0.000 0 1.000000000 0.17391304 0.19555556

147 2.415121e-02 0.000 0 1.000000000 0.17391304 0.23555556

148 2.655168e-02 0.000 0 1.000000000 0.17391304 0.21333333

149 2.612873e-02 0.000 0 1.000000000 0.17391304 0.28444444

150 3.065813e-02 0.000 0 1.000000000 0.17391304 0.23111111

151 1.854875e-02 0.000 0 1.000000000 0.17391304 0.36666667

152 1.674724e-02 0.000 0 1.000000000 0.17391304 0.32444444

153 1.259145e-02 0.000 1 1.000000000 0.17391304 0.22888889

154 2.408523e-02 0.000 0 1.000000000 0.17391304 0.32000000

155 1.582030e-02 0.000 1 1.000000000 0.17391304 0.26666667

156 3.966162e-02 0.000 1 1.000000000 0.17391304 0.23555556

157 2.742906e-02 0.000 0 1.000000000 0.17391304 0.18000000

158 1.368171e-02 0.000 0 0.452674897 0.17391304 0.80666667

159 1.502216e-02 0.000 0 0.452674897 0.17391304 0.42888889

160 1.594585e-02 0.000 0 1.000000000 0.17391304 0.40666667

161 1.424235e-02 0.000 1 0.452674897 0.17391304 0.48888889

162 1.637678e-02 0.000 0 0.452674897 0.17391304 1.00000000

163 2.054010e-02 0.000 1 0.452674897 0.17391304 1.00000000

164 1.700238e-02 0.000 1 0.452674897 0.17391304 1.00000000

165 2.513255e-02 0.000 0 0.452674897 0.17391304 0.39333333

166 3.279402e-02 0.000 0 0.452674897 0.17391304 0.44444444

[ reached getOption("max.print") -- omitted 340 rows ]

attr(,"scaled:center")

crim zn chas nox rad medv

0.00632 0.00000 0.00000 0.38500 1.00000 5.00000

attr(,"scaled:scale")

crim zn chas nox rad medv

88.96988 100.00000 1.00000 0.48600 23.00000 45.00000

> {

+ # Split into training and test sets

+ n <- nrow(data.scaled)

+ n

+

+ set.seed(1234)

+ index <- sample(1:n, round(0.8*n))

+ index

+

+ train <- as.data.frame(data.scaled[index,])

+ head(train)

+

+ test <- as.data.frame(data.scaled[-index,])

+ head(test)

+ }

crim zn chas nox rad medv

1 0.0000000000 0.180 0 0.3148148 0.00000000 0.4222222

5 0.0007050701 0.000 0 0.1502058 0.08695652 0.6933333

7 0.0009213230 0.125 0 0.2860082 0.17391304 0.3977778

8 0.0015536719 0.125 0 0.2860082 0.17391304 0.4911111

9 0.0023032514 0.125 0 0.2860082 0.17391304 0.2555556

11 0.0024566741 0.125 0 0.2860082 0.17391304 0.2222222

> {

+ # Define the input and output variables for training

+ names.col <- colnames(train)

+ names.col

+

+ var.dependent <- names.col[6]

+ var.dependent

+

+ var.independent <- names.col[-6]

+ var.independent

+

+ f.var.independent <- paste(var.independent, collapse = " + ")

+ f.var.independent

+

+ f <- paste(var.dependent, "~", f.var.independent)

+ f

+ }

[1] "medv ~ crim + zn + chas + nox + rad"

> {

+ # Train the network and plot the model

+ model <- neuralnet(f,

+ data = train,

+ hidden = c(5, 3, 3, 2),

+ linear.output = T)

+ plot(model)

+ }

> {

+ # Test

+ predicted <- compute(model, test)

+ predicted$net.result

+

+ predicted.real <- predicted$net.result * (maxs[6] - mins[6]) + mins[6]

+ predicted.real

+

+ test.real <- test$medv * (maxs[6] - mins[6]) + mins[6]

+ test.real

+ }

[1] 24.0 36.2 22.9 27.1 16.5 15.0 15.2 15.6 16.6 14.5 20.0 24.7

[13] 21.2 19.3 16.6 19.7 18.9 35.4 17.4 28.0 23.9 19.8 19.4 22.8

[25] 18.8 20.4 22.0 16.2 18.0 15.6 13.3 15.6 19.4 13.1 50.0 50.0

[37] 23.1 29.6 36.4 33.3 22.6 23.3 21.5 21.7 50.0 46.7 31.7 23.7

[49] 23.7 18.5 29.6 21.9 36.0 43.5 24.4 33.1 35.1 20.1 24.8 22.0

[61] 28.4 22.8 16.1 22.1 17.8 21.0 18.5 19.3 19.8 19.4 19.0 18.7

[73] 22.9 18.6 22.7 23.1 50.0 50.0 13.3 23.2 11.9 15.0 9.5 16.1

[85] 14.3 9.6 17.1 18.4 15.4 14.1 21.4 19.0 13.3 14.6 23.0 23.7

[97] 21.8 21.2 19.1 24.5 22.4

> {

+ # Compare predicted with actual values (scatter plot)

+ plot(test.real, predicted.real,

+ xlim=c(0, 50), ylim=c(0, 50),

+ main="Predicted vs. actual values",

+ xlab="Actual", ylab="Predicted",

+ col="red",

+ pch=18, cex=0.7)

+ abline(0, 1, col="blue", lty=2)

+

+ MAPE.model <- sum(abs(test.real - predicted.real)/test.real * 100) / length(test.real)

+ MAPE.model

+ }

[1] 20.09686
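The MAPE of about 20.1% printed above is the mean absolute percentage error: the average of |actual - predicted| / actual, expressed in percent. As a small sketch, the same calculation can be wrapped in a helper; the function name `mape` and the sample numbers are assumptions made for illustration:

```r
# Mean absolute percentage error, in percent.
# Assumes no actual value is zero (medv in the Boston data is at least 5).
mape <- function(actual, predicted) {
  mean(abs(actual - predicted) / abs(actual)) * 100
}

# Hypothetical values for illustration only.
actual    <- c(20, 25, 50)
predicted <- c(22, 20, 45)

# Relative errors are 0.1, 0.2 and 0.1, so the mean is 0.4 / 3.
print(mape(actual, predicted))
```

Because the error is divided by the actual value, cheap houses weigh more heavily in MAPE than expensive ones for the same absolute miss.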

2.

######################################################

# Install and load packages

# The xlsx package requires Java; check that it is installed first (see the appendix)

install.packages("xlsx")

install.packages("nnet")

library(xlsx)

library(nnet)

# Read the time-series data

data <- read.xlsx2(file.choose(), 1)

data

data$종가 <- gsub(",", "", data$종가)

data$종가 <- as.numeric(data$종가)

df <- data.frame(일자=data$년.월.일, 종가=data$종가)

df <- df[order(df$일자), ]

df

n <- nrow(df)

n

rownames(df) <- 1:n

# Normalize the data to the range [0.05, 0.95]

norm <- (df$종가 - min(df$종가)) / (max(df$종가) - min(df$종가)) * 0.9 + 0.05

norm

df <- cbind(df, 종가norm=norm)

df

# Split into training and test data

n80 <- round(n * 0.8, 0)

n80

df.learning <- df[1:n80, ]

df.learning

df.test <- df[(n80+1):n, ]

df.test

# Neural network structure

INPUT_NODES <- 10

HIDDEN_NODES <- 10

OUTPUT_NODES <- 5

ITERATION <- 100

# Function that builds the input/output data sets

getDataSet <- function(item, from, to, size) {

  dataframe <- NULL

  to <- to - size + 1 # index where the last window starts

  for(i in from:to) { # loop over the start index of each row

    start <- i # start index of this row

    end <- start + size - 1 # end index of this row

    temp <- item[c(start:end)] # extract item[start..end]

    dataframe <- rbind(dataframe, t(temp)) # t() turns the vector into a single row, which is appended to the result

  }

  return(dataframe) # return the result (a matrix with one window per row)

}
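To see what this sliding-window function produces, here is a small sketch on a made-up series. The helper name `make_windows` and the toy vector are assumptions for illustration; it builds the same kind of windows as `getDataSet`, but collects the rows with `lapply()` and binds them once instead of growing the result inside a loop:

```r
# Illustrative helper (not part of the original script).
make_windows <- function(item, from, to, size) {
  starts <- from:(to - size + 1)                            # first index of every window
  rows <- lapply(starts, function(s) item[s:(s + size - 1)])
  do.call(rbind, rows)                                      # one window per row
}

x <- 1:8                                # hypothetical toy series

inputs  <- make_windows(x, 1, 6, 3)     # length-3 windows taken from x[1..6]
targets <- make_windows(x, 4, 8, 2)     # the 2 values that follow each input window

print(inputs)
print(targets)
```

Row i of `targets` holds the values that come right after row i of `inputs`, which is the same pairing the script sets up with 10 input values and 5 output values per row.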

# Build the input data

in_learning <- getDataSet(df.learning$종가norm, 1, 92, INPUT_NODES)

in_learning

# Build the target data

out_learning <- getDataSet(df.learning$종가norm, 11, 97, OUTPUT_NODES)

out_learning

# Train

model <- nnet(in_learning, out_learning, size=HIDDEN_NODES, maxit=ITERATION)

# Build the test input data

in_test <- getDataSet(df.test$종가norm, 1, 19, INPUT_NODES)

in_test

# Predict

predicted_values <- predict(model, in_test, type="raw")

Vpredicted <- (predicted_values - 0.05) / 0.9 * (max(df$종가) - min(df$종가)) + min(df$종가)

Vpredicted

# Build the actual values for the prediction period

Vreal <- getDataSet(df.test$종가, 11, 24, OUTPUT_NODES)

Vreal

# Compute and print the error

ERROR <- abs(Vreal - Vpredicted)

MAPE <- rowMeans(ERROR / Vreal) * 100

MAPE

mean(MAPE)

# Build the forecasting input data

in_forecasting <- df$종가norm[112:121]

in_forecasting

# Forecast

predicted_values <- predict(model, in_forecasting, type="raw")

Vpredicted <- (predicted_values - 0.05) / 0.9 * (max(df$종가) - min(df$종가)) + min(df$종가)

Vpredicted

# Plot the results

plot(82:121, df$종가[82:121], xlab="Period", ylab="Closing price", xlim=c(82,126), ylim=c(1800, 2100), type="o")

lines(122:126, Vpredicted, type="o", col="red")

abline(v=121, col="blue", lty=2)

#####################################################################

## 13.4 Classifying Iris Species (Classification Problem)

# Inspect the data

install.packages("neuralnet")

library(neuralnet)

iris

data <- iris

# Check for missing values

na <- apply(data, 2, is.na)

na

apply(na, 2, sum)

# Normalize the data (min-max scaling)

maxs <- apply(data[,1:4], 2, max)

maxs

mins <- apply(data[,1:4], 2, min)

mins

data[,1:4] <- scale(data[,1:4], center = mins, scale = maxs - mins)

data[,1:4]

summary(data)

# Create the output columns (one indicator column per species)

data$setosa <- ifelse(data$Species == "setosa", 1, 0)

data$virginica <- ifelse(data$Species == "virginica", 1, 0)

data$versicolor <- ifelse(data$Species == "versicolor", 1, 0)

head(data)
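The three `ifelse()` calls above build one 0/1 indicator column per species, which is usually called one-hot encoding. As a rough sketch, the same columns can be generated for every level of a factor at once; the small `species` vector below is made up for illustration:

```r
# Hypothetical labels for illustration only.
species <- factor(c("setosa", "virginica", "versicolor", "setosa"))

# One column per factor level; 1 where the row belongs to that level, else 0.
onehot <- sapply(levels(species), function(lv) as.integer(species == lv))

print(onehot)
print(rowSums(onehot))   # each row should contain exactly one 1
```

This avoids writing one line per class, which helps when a data set has more than a handful of classes.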

# Split into training and test sets

n <- nrow(data)

n

set.seed(2000)

index <- sample(1:n, round(0.8*n))

index

train <- as.data.frame(data[index,])

head(train)

test <- as.data.frame(data[-index,])

head(test)

# Input and output variables for training

f.var.independent <- "Sepal.Length + Sepal.Width + Petal.Length + Petal.Width"

f.var.dependent <- "setosa + versicolor + virginica"

f <- paste(f.var.dependent, "~", f.var.independent)

f

# Train the network and plot the model

nn <- neuralnet(f, data=train, hidden=c(6, 8, 14, 8, 6), linear.output=F)

plot(nn)

# Test

predicted <- compute(nn, test[,-5:-8])

predicted$net.result

idx <- max.col(predicted$net.result)

idx

species <- c('setosa', 'versicolor', 'virginica')

predicted <- species[idx]

predicted

# Compare predicted with actual values (confusion matrix)

table(test$Species, predicted)

t <- table(test$Species, predicted)

tot <- sum(t)

tot

same <- sum(diag(t))

same

same / tot
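One caveat about the accuracy calculation above: `sum(diag(t))` only counts correct predictions when the table is square with rows and columns in the same order. If the network never predicts one of the species, `table()` drops that column and the diagonal no longer lines up. A minimal sketch of a safer version, using made-up labels (all values here are assumptions for illustration):

```r
# Hypothetical labels; "setosa" is never predicted in this example.
actual <- factor(c("setosa", "versicolor", "virginica", "virginica"),
                 levels = c("setosa", "versicolor", "virginica"))
predicted <- c("versicolor", "versicolor", "virginica", "virginica")

# Naive table: only 2 columns, so the diagonal compares the wrong classes.
naive <- table(actual, predicted)
naive.accuracy <- sum(diag(naive)) / sum(naive)

# Give the predictions the same levels as the actual labels so that the
# table is always 3 x 3 with matching row and column order.
predicted.f <- factor(predicted, levels = levels(actual))
cm <- table(actual = actual, predicted = predicted.f)
accuracy <- sum(diag(cm)) / sum(cm)

print(cm)
print(c(naive = naive.accuracy, fixed = accuracy))
```

Three of the four labels match here, and only the version with fixed levels reports that.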

######################################################

install.packages("neuralnet")

install.packages("Metrics")

install.packages("MASS")

library(neuralnet)

library(Metrics)

library(MASS)

data("Boston")

data<-Boston

keeps<-c("crim","indus","nox","rm","age","dis","tax","ptratio","lstat","medv")

data<-data[keeps]

data<-scale(data)

apply(data,2,function(x) sum(is.na(x)))

set.seed(2016)

n = nrow(data)

train <- sample(1:n, 400,FALSE)

f<-medv~ crim+indus + nox + rm + age + dis + tax + ptratio + lstat

fit<-neuralnet(f,

data = data[train,],

hidden = c(10,12,20),

algorithm = "rprop+",

err.fct ="sse",

act.fct = "logistic",

threshold = 0.1,

linear.output = TRUE)

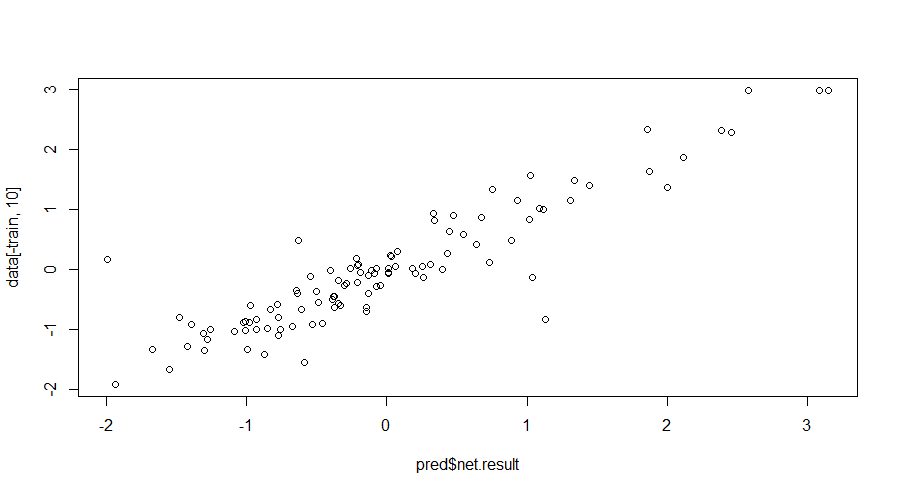

pred<-compute(fit, data[-train, 1:9])

plot(data[-train,10]~pred$net.result)

round(cor(pred$net.result, data[-train,10]),2)

mse(pred$net.result, data[-train,10])

rmse(pred$net.result, data[-train,10])
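For readers without the Metrics package, `mse()` and `rmse()` are easy to reproduce in base R. The sketch below uses made-up numbers, and the names `mse_manual` and `rmse_manual` are assumptions for illustration. Note that because `data <- scale(data)` standardized every column, the errors computed in this section are in units of standard deviations of `medv`, not in the original price units.

```r
# Mean squared error and its square root, written in base R.
mse_manual  <- function(actual, predicted) mean((actual - predicted)^2)
rmse_manual <- function(actual, predicted) sqrt(mse_manual(actual, predicted))

# Hypothetical values for illustration only.
actual    <- c(1, 2, 3)
predicted <- c(1, 2, 5)

# Squared errors are 0, 0 and 4, so the MSE is 4 / 3.
print(mse_manual(actual, predicted))
print(rmse_manual(actual, predicted))
```

RMSE is simply the square root of MSE, which puts the error back on the same scale as the target variable.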

##############################################

require(deepnet)

train

X = data[train,1:9]

Y = data[train, 10]

fitB <- nn.train(x=X,y=Y,

initW = NULL,

initB = NULL,

hidden = c(10,12,20),

learningrate = 0.58,

momentum = 0.74,

learningrate_scale = 1,

activationfun = "sigm",

output = "linear",

numepochs = 970,

batchsize = 60,

hidden_dropout = 0,

visible_dropout = 0)

predB<-nn.predict(fitB, data[-train,1:9])

round(cor(predB, data[-train,10]),2)

mse(predB, data[-train,10])

rmse(predB, data[-train,10])

#############################


install.packages("neuralnet")

library(neuralnet)

data <- iris

# Check for missing values

na <- apply(data, 2, is.na)

apply(na, 2, sum)

# Normalize the data (min-max scaling)

maxs <- apply(data[,1:4], 2, max)

mins <- apply(data[,1:4], 2, min)

data[,1:4] <- scale(data[,1:4], center = mins, scale = maxs - mins)

summary(data)

# Create the output columns (one indicator column per species)

data$setosa <- ifelse(data$Species == "setosa", 1, 0)

data$virginica <- ifelse(data$Species == "virginica", 1, 0)

data$versicolor <- ifelse(data$Species == "versicolor", 1, 0)

head(data)

# Split into training and test sets

n <- nrow(data)

set.seed(2000)

index <- sample(1:n, round(0.8*n))

head(index)

species <- c('setosa', 'versicolor', 'virginica')

train <- as.data.frame(data[index,])

test <- as.data.frame(data[-index,])

# Input and output variables for training

f.var.independent <- "Sepal.Length + Sepal.Width + Petal.Length + Petal.Width"

f.var.dependent <- "setosa + versicolor + virginica"

f <- paste(f.var.dependent, "~", f.var.independent)

accuracy_k <- NULL

maxCount <- 5

# Repeat maxCount times, each time with randomly chosen hidden-layer sizes

for(kk in 1:maxCount){

getIndex1 <- sample(1:maxCount, 1, replace=TRUE)

getIndex1

getIndex2 <- sample(1:maxCount, 1, replace=TRUE)

getIndex2

getIndex3 <- sample(1:maxCount, 1, replace=TRUE)

getIndex3

nn <- neuralnet(f, data=train, hidden=c(getIndex1, getIndex2, getIndex3), stepmax=1e6, linear.output=F)

#plot(n)

predicted <- compute(nn, test[,-5:-8])

print(head(predicted$net.result))

idx <- max.col(predicted$net.result)

print(idx)

predicted <- species[idx]

print(predicted)


# Compare predicted with actual values (confusion matrix)

table(test$Species, predicted)

t <- table(test$Species, predicted)

tot <- sum(t)

same <- sum(diag(t))

same / tot

accuracy_k <- c(accuracy_k, (same / tot) * 100)

}

valid_k <- data.frame(k = c(1:maxCount), accuracy = accuracy_k)

valid_k

# Plot the accuracy

plot(formula = accuracy ~ k, data = valid_k, type = "o", pch = 20, main = "ANN")

Partial example output

library(neuralnet)

> library(Metrics)

Error in library(Metrics) : there is no package called ‘Metrics’

> install.packages("Metrics")

WARNING: Rtools is required to build R packages but is not currently installed. Please download and install the appropriate version of Rtools before proceeding:

https://cran.rstudio.com/bin/windows/Rtools/

Installing package into ‘C:/Users/PC44069/Documents/R/win-library/4.1’

(as ‘lib’ is unspecified)

trying URL 'https://cran.rstudio.com/bin/windows/contrib/4.1/Metrics_0.1.4.zip'

Content type 'application/zip' length 83274 bytes (81 KB)

downloaded 81 KB

package ‘Metrics’ successfully unpacked and MD5 sums checked

The downloaded binary packages are in

C:\Users\Public\Documents\ESTsoft\CreatorTemp\Rtmp6rxTuC\downloaded_packages

> library(MASS)

> library(Metrics)

> data("Boston")

> data<-Boston

> keeps<-c("crim","indus","nox","rm","age","dis","tax","ptratio","lstat","medv")

> data<-data[keeps]

> data<-scale(data)

> apply(data,2,function(x) sum(is.na(x)))

crim indus nox rm age dis tax ptratio

0 0 0 0 0 0 0 0

lstat medv

0 0

> set.seed(2016)

> n = nrow(data)

> train <- sample(1:n, 400,FALSE)

> f<-medv~ crim+indus + nox + rm + age + dis + tax + ptratio + lstat

> fit<-neuralnet(f,

+ data = data[train,],

+ hidden = c(10,12,20),

+ algorithm = "rprop+",

+ err.fct ="sse",

+ act.fct = "logistic",

+ threshold = 0.1,

+ linear.output = TRUE)

> pred<-compute(fit, data[-train, 1:9])

> plot(data[-train,10]~pred$net.result)

> round(cor(pred$net.result, data[-train,10]),2)

[,1]

[1,] 0.91

> mse(pred$net.result, data[-train,10])

[1] 0.1886343

> rmse(pred$net.result, data[-train,10])

[1] 0.4343205

> ##############################################
